import pandas as pd

import numpy as np

import random

from ucimlrepo import fetch_ucirepo

import boto3

import json

from tqdm.auto import tqdm

import time

from sklearn.model_selection import train_test_split

from autogluon.tabular import TabularDataset, TabularPredictor

from sklearn.metrics import (

mean_squared_error, mean_absolute_error, r2_score, median_absolute_error,

accuracy_score, precision_score, recall_score, f1_score, confusion_matrix

)

from scipy.stats import pearsonr, spearmanr, wasserstein_distance

from statsmodels.stats.diagnostic import het_white

def calculate_regression_metrics(y_true, y_pred):

return {

'root_mean_squared_error': np.sqrt(mean_squared_error(y_true, y_pred)),

'mean_squared_error': mean_squared_error(y_true, y_pred),

'mean_absolute_error': mean_absolute_error(y_true, y_pred),

'r2': r2_score(y_true, y_pred),

'pearsonr': pearsonr(y_true, y_pred)[0],

'spearmanr': spearmanr(y_true, y_pred)[0],

'median_absolute_error': median_absolute_error(y_true, y_pred),

'earths_mover_distance': wasserstein_distance(y_true, y_pred)

}

def caluclate_classification_metrics(y_true, y_pred):

return {

'accuracy': accuracy_score(y_true, y_pred),

'precision': precision_score(y_true, y_pred, average='weighted'),

'recall': recall_score(y_true, y_pred, average='weighted'),

'f1_score': f1_score(y_true, y_pred, average='weighted'),

'confusion_matrix': confusion_matrix(y_true, y_pred)

}

def string_to_yearmon(date):

date = date.split()

date = date[0].split('/') + date[1].split(':')

date = date[2] + '-' + date[0].zfill(2) #+ '-' + date[1].zfill(2) + ' ' + date[3].zfill(2) + ':' + date[4].zfill(2)

return date

def call_llama(system_prompt, input):

template = f"""<s>[INST] <<SYS>>{system_prompt}<</SYS>>{input}[/INST]"""

client = boto3.client(service_name='bedrock-runtime',region_name='us-west-2')

body = json.dumps({

"prompt": template,

"temperature": 0.,

"top_p": 0.9,

"max_gen_len": 2048,

})

response = client.invoke_model(

body=body,

modelId='us.meta.llama3-2-90b-instruct-v1:0',

accept='application/json',

contentType='application/json'

)

response_body = json.loads(response['body'].read())

return response_body

def call_claude(system_prompt, input):

client = boto3.client(service_name='bedrock-runtime',region_name='us-west-2')

body=json.dumps(

{

"anthropic_version": "bedrock-2023-05-31",

"max_tokens": 2048,

"messages": [

{

"role": "user",

"content": [

{

"type": "text",

"text": system_prompt + '\n' + input,

}

]

}

]

}

)

response = client.invoke_model(body=body,

modelId='anthropic.claude-3-5-sonnet-20241022-v2:0',

contentType='application/json',

accept='application/json')

response_body = json.loads(response.get('body').read())

return response_body

from sklearn.preprocessing import StandardScaler

from statsmodels.stats.outliers_influence import variance_inflation_factor

from statsmodels.tools.tools import add_constant

class Winsorizer:

def __init__(self, cols, percentile=99):

self.cols = cols # List of columns to apply winsorization to

self.percentile = percentile # Percentile to define the outliers

self.lower_bounds = {} # To store the lower quantiles

self.upper_bounds = {} # To store the upper quantiles

def fit(self, df):

"""Fit the winsorizer to the data, remembering the quantiles."""

for col in self.cols:

lower = df[col].quantile(1 - self.percentile / 100)

upper = df[col].quantile(self.percentile / 100)

self.lower_bounds[col] = lower

self.upper_bounds[col] = upper

def transform(self, df):

"""Apply winsorization to a new DataFrame using the learned quantiles."""

for col in self.cols:

lower = self.lower_bounds[col]

upper = self.upper_bounds[col]

df[col] = np.clip(df[col], lower, upper)

return df

def fit_transform(self, df):

"""Fit the model and apply winsorization to the same DataFrame."""

self.fit(df)

return self.transform(df)

def calculate_vif(df, cols):

X = df[cols]

X_with_const = add_constant(X) # Add constant for VIF calculation

vif_data = pd.DataFrame()

vif_data['feature'] = X_with_const.columns

vif_data['VIF'] = [variance_inflation_factor(X_with_const.values, i) for i in range(X_with_const.shape[1])]

return vif_data

import seaborn as sns

import statsmodels.api as smPredict Zero-inflated and Long/fat-tailed Outcomes

This notebook details how to predict a real-number outcome that is zero-inflated and long/fat-tailed such as sales prediction in retail. We provide baseline regression, regression trained using winsorized outcome, regression trained on log(y+1) outcome, and hurdle regression with and without Duan’s method.

Import

Dataset

We use the UCI Online Retail dataset, which are transactions from a UK-based, non-store online retail from 2010-12-01 and 2011-12-09. We perform the following data processing:

- Remove transactions without

CustomerID; from 541,909 to 406,829 transactions - Filter out transactions where either

UnitPriceorQuantityis less than zero; from 406,829 to 397,884 transactions - Fill in missing product

Descriptionwith valueUNKNOWN.

online_retail = fetch_ucirepo(id=352)

transaction_df = online_retail['data']['original']

transaction_df.shape(541909, 8)#create yearmon for train-valid split

transaction_df['yearmon'] = transaction_df.InvoiceDate.map(string_to_yearmon)

#get rid of transactions without cid

transaction_df = transaction_df[~transaction_df.CustomerID.isna()].reset_index(drop=True)

#fill in unknown descriptions

transaction_df.Description = transaction_df.Description.fillna('UNKNOWN')

#convert customer id to string

transaction_df['CustomerID'] = transaction_df['CustomerID'].map(lambda x: str(int(x)))

transaction_df.shape(406829, 9)#check if still na

transaction_df.isna().mean()InvoiceNo 0.0

StockCode 0.0

Description 0.0

Quantity 0.0

InvoiceDate 0.0

UnitPrice 0.0

CustomerID 0.0

Country 0.0

yearmon 0.0

dtype: float64#simplify by filtering unit price and quantity to be non-zero (get rid of discounts, cancellations, etc)

transaction_df = transaction_df[(transaction_df.UnitPrice>0)&\

(transaction_df.Quantity>0)].reset_index(drop=True)

#add sales

transaction_df['Sales'] = transaction_df.UnitPrice * transaction_df.Quantity

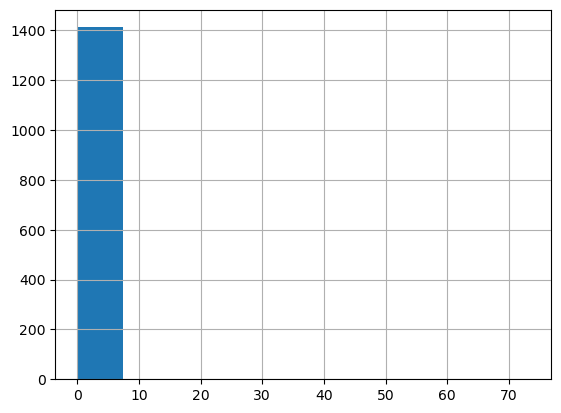

transaction_df.shape(397884, 10)Problem Formulation and Outcome

We formulate the problem as predicting the sales (TargetSales) during Q4 2011 for each customers who bought at least one item during Q1-Q3 2011. Note that we are interested in predicting the actual sales number per customer as accurately as possible; this is common for marketing use cases such as determining what spend threshold to give each customer in a promotion, targeting customers for upselling, or detecting early signs of churns.

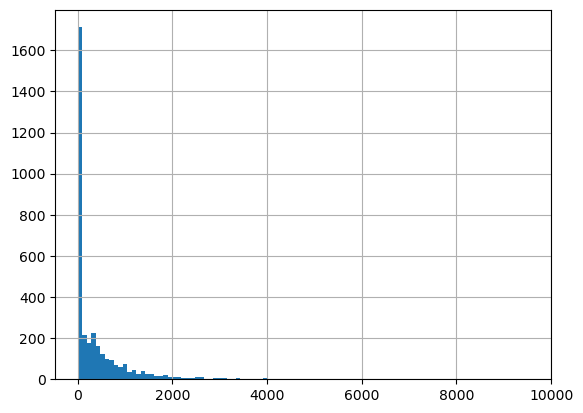

We transform the transaction dataset into a customer-level dataset where we calculate features using transactions between 2011-01 to 2011-09 and outcome using transactions between 2011-10 to 2011-12, summing Quantity times UnitPrice. We left-join the customers in feature set to outcome set. This will result in the zero-inflated nature of the outcome as not all customers will come back in Q4. The distribution of non-zero sales is naturally long/fat-tailed with a few customers having extraordinarily high amount of sales in Q4. This resulted in a customer-level dataset with 3,438 customers.

feature_period = {'start': '2011-01', 'end': '2011-09'}

outcome_period = {'start': '2011-10', 'end': '2011-12'}

feature_transaction = transaction_df[(transaction_df.yearmon>=feature_period['start'])&\

(transaction_df.yearmon<=feature_period['end'])]

outcome_transaction = transaction_df[(transaction_df.yearmon>=outcome_period['start'])&\

(transaction_df.yearmon<=outcome_period['end'])]

feature_transaction.shape, outcome_transaction.shape((240338, 10), (131389, 10))#aggregate sales during outcome period

outcome_sales = outcome_transaction.groupby('CustomerID').Sales.sum().reset_index()

outcome_sales| CustomerID | Sales | |

|---|---|---|

| 0 | 12347 | 1519.14 |

| 1 | 12349 | 1757.55 |

| 2 | 12352 | 311.73 |

| 3 | 12356 | 58.35 |

| 4 | 12357 | 6207.67 |

| ... | ... | ... |

| 2555 | 18276 | 335.86 |

| 2556 | 18277 | 110.38 |

| 2557 | 18282 | 77.84 |

| 2558 | 18283 | 974.21 |

| 2559 | 18287 | 1072.00 |

2560 rows × 2 columns

#aggregate sales during feature period

feature_sales = feature_transaction.groupby('CustomerID').Sales.sum().reset_index()

feature_sales| CustomerID | Sales | |

|---|---|---|

| 0 | 12346 | 77183.60 |

| 1 | 12347 | 2079.07 |

| 2 | 12348 | 904.44 |

| 3 | 12350 | 334.40 |

| 4 | 12352 | 2194.31 |

| ... | ... | ... |

| 3433 | 18280 | 180.60 |

| 3434 | 18281 | 80.82 |

| 3435 | 18282 | 100.21 |

| 3436 | 18283 | 1120.67 |

| 3437 | 18287 | 765.28 |

3438 rows × 2 columns

#merge to get TargetSales including those who spent during feature period but not during outcome (zeroes)

outcome_df = feature_sales[['CustomerID']].merge(outcome_sales, on='CustomerID', how='left')

outcome_df['Sales'] = outcome_df['Sales'].fillna(0)

outcome_df.columns = ['CustomerID', 'TargetSales']

outcome_df| CustomerID | TargetSales | |

|---|---|---|

| 0 | 12346 | 0.00 |

| 1 | 12347 | 1519.14 |

| 2 | 12348 | 0.00 |

| 3 | 12350 | 0.00 |

| 4 | 12352 | 311.73 |

| ... | ... | ... |

| 3433 | 18280 | 0.00 |

| 3434 | 18281 | 0.00 |

| 3435 | 18282 | 77.84 |

| 3436 | 18283 | 974.21 |

| 3437 | 18287 | 1072.00 |

3438 rows × 2 columns

#confirm zero-inflated, long/fat-tailed

outcome_df.TargetSales.describe(percentiles=[i/10 for i in range(10)])count 3438.000000

mean 666.245829

std 4016.843037

min 0.000000

0% 0.000000

10% 0.000000

20% 0.000000

30% 0.000000

40% 0.000000

50% 102.005000

60% 263.006000

70% 425.790000

80% 705.878000

90% 1273.611000

max 168469.600000

Name: TargetSales, dtype: float64#confirm zero-inflated, long/fat-tailed

outcome_df[outcome_df.TargetSales<=10_000].TargetSales.hist(bins=100)

Feature

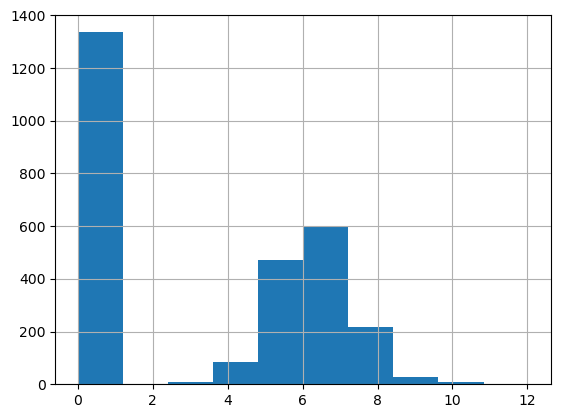

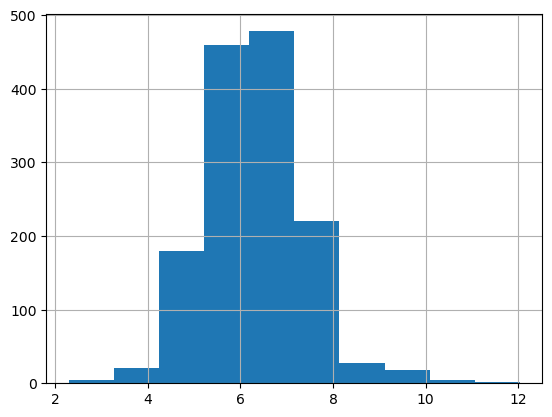

We represent a customer using traditional RFM features namely recency of purchase, purchase days, total sales, number of distinct products purchased, number of distinct category purchased, customer tenure within 2011, average purchase frequency, average purchase value, and percentage of purchase across all 9 categories. This is based on data from Q1-Q3 2011.

Since the UCI Online Retail dataset does not have a category but only contains descriptions over 3,000 items, we use LLaMA 3.2 90B to infer categories based on randomly selected 1,000 descriptions. This is to make the category preference representation for each customer, which is more tractable than including features about all 3,000+ items. After that, we use the same model to label a category for each description. The categories are:

- Home Decor

- Kitchen and Dining

- Fashion Accessories

- Stationary and Gifts

- Toys and Games

- Seasonal and Holiday

- Personal Care and Wellness

- Outdoor and Garden

- Others

Classify Description into Category

feature_transaction.Description.nunique()3548Get Category

descriptions = feature_transaction.Description.unique().tolist()

print(descriptions[:5])

#randomize descriptions with seed 112 to get which categories we should use

np.random.seed(112)

random_descriptions = np.random.choice(descriptions, 1000, replace=False)

print(random_descriptions[:5])['JUMBO BAG PINK POLKADOT', 'BLUE POLKADOT WRAP', 'RED RETROSPOT WRAP ', 'RECYCLING BAG RETROSPOT ', 'RED RETROSPOT SHOPPER BAG']

['MODERN FLORAL STATIONERY SET' 'PURPLE BERTIE GLASS BEAD BAG CHARM'

'PARTY INVITES SPACEMAN' 'MONTANA DIAMOND CLUSTER EARRINGS'

'SKULLS DESIGN COTTON TOTE BAG']# res = call_llama(

# 'You are a product categorization assistant at a retail website.',

# 'Given the following product descriptions, come up with a few product categories they should be classified into.'+'\n'.join(random_descriptions)

# )

# print(res['generation'])# res# res = call_claude(

# 'You are a product categorization assistant at a retail website.',

# 'Given the following product descriptions, come up with a few product categories they should be classified into.'+'\n'.join(random_descriptions)

# )

# print(res['content'][0]['text'])# resLLaMA 3.2 90B Output:

<<SYS>>Based on the product descriptions, I would categorize them into the following categories:

1. Home Decor:

* Wall art

* Decorative items (e.g. vases, figurines, etc.)

* Lighting (e.g. candles, lanterns, etc.)

* Textiles (e.g. throw pillows, blankets, etc.)

2. Kitchen and Dining:

* Cookware and utensils

* Tableware (e.g. plates, cups, etc.)

* Kitchen decor (e.g. signs, magnets, etc.)

* Food and drink items (e.g. tea, coffee, etc.)

3. Fashion and Accessories:

* Jewelry (e.g. necklaces, earrings, etc.)

* Handbags and wallets

* Clothing and accessories (e.g. scarves, hats, etc.)

4. Stationery and Gifts:

* Cards and gift wrap

* Stationery (e.g. notebooks, pens, etc.)

* Gift items (e.g. mugs, keychains, etc.)

5. Toys and Games:

* Toys (e.g. stuffed animals, puzzles, etc.)

* Games and puzzles

6. Seasonal and Holiday:

* Christmas decorations and gifts

* Easter decorations and gifts

* Other seasonal items (e.g. Halloween, etc.)

7. Personal Care and Wellness:

* Beauty and personal care items (e.g. skincare, haircare, etc.)

* Wellness and self-care items (e.g. essential oils, etc.)

8. Outdoor and Garden:

* Garden decor and accessories

* Outdoor furniture and decor

* Gardening tools and supplies

Note that some products may fit into multiple categories, but I have assigned them to the one that seems most relevant.Claude 3.5 v2 Output

Based on these product descriptions, I would suggest the following main product categories:

1. Home Decor

- Candle holders

- Picture frames

- Wall art & signs

- Clocks

- Cushions & covers

- Storage items

- Decorative objects

2. Jewelry & Accessories

- Necklaces

- Bracelets

- Earrings

- Hair accessories

- Bag charms

- Key rings

3. Garden & Outdoor

- Plant pots

- Garden tools

- Outdoor decorations

- Bird houses

- Garden markers

4. Kitchen & Dining

- Tea sets

- Mugs

- Kitchen storage

- Cutlery

- Baking accessories

- Tea towels

5. Stationery & Paper Goods

- Notebooks

- Gift wrap

- Cards

- Paper decorations

- Writing sets

6. Party & Celebrations

- Party supplies

- Gift bags

- Christmas decorations

- Easter items

- Birthday items

7. Children's Items

- Toys

- Children's tableware

- School supplies

- Kids' accessories

8. Fashion Accessories

- Bags

- Purses

- Scarves

- Travel accessories

9. Bath & Beauty

- Bathroom accessories

- Toiletry bags

- Beauty items

10. Lighting

- Lamps

- String lights

- Tea lights

- Lanterns

These categories cover the main types of products in the list while providing logical groupings for customers to browse.categories = [

'Home Decor',

'Kitchen and Dining',

'Fashion Accessories',

'Stationary and Gifts',

'Toys and Games',

'Seasonal and Holiday',

'Personal Care and Wellness',

'Outdoor and Garden',

]

len(categories)8Annotate Category to Description

# #loop through descriptions in batches of batch_size

# res_texts = []

# batch_size = 100

# for i in tqdm(range(0, len(descriptions), batch_size)):

# batch = descriptions[i:i+batch_size]

# d = "\n".join(batch)

# inp = f'''Categorize the following product descriptions into {", ".join(categories)} or Others, if they do not fall into any.

# Only answer in the following format:

# "product description of product #1"|"product category classified into"

# "product description of product #2"|"product category classified into"

# ...

# "product description of product #n"|"product category classified into"

# Here are the product descriptions:

# {d}

# '''

# while True:

# res = call_claude('You are a product categorizer at a retail website', inp)

# # if res['generation_token_count'] > 1: #for llama

# if res['usage']['output_tokens'] > 1:

# break

# else:

# print('Retrying...')

# time.sleep(2)

# res_text = res['content'][0]['text'].strip().split('\n')

# #for llama

# # .replace('[SYS]','').replace('<<SYS>>','')\

# # .replace('[/SYS]','').replace('<</SYS>>','')\

# if res_text!='':

# res_texts.extend(res_text)# with open('../data/sales_prediction/product_description_category.csv','w') as f:

# f.write('"product_description"|"category"\n')

# for i in res_texts:

# f.write(f'{i}\n')product_description_category = pd.read_csv('../data/sales_prediction/product_description_category.csv',

sep='|')

#clean product_description

product_description_category['Description'] = descriptions

product_description_category.category.value_counts(normalize=True)category

Home Decor 0.328636

Kitchen and Dining 0.195885

Fashion Accessories 0.138670

Stationary and Gifts 0.116122

Seasonal and Holiday 0.087373

Personal Care and Wellness 0.047351

Toys and Games 0.045096

Outdoor and Garden 0.032976

Others 0.007892

Name: proportion, dtype: float64feature_transaction_cat = feature_transaction.merge(product_description_category,

how='inner',

on = 'Description',)

feature_transaction.shape, feature_transaction_cat.shape((240338, 10), (240338, 12))RFM

#convert invoice date to datetime

feature_transaction_cat['InvoiceDate'] = pd.to_datetime(feature_transaction_cat['InvoiceDate'])

# last date in feature set

current_date = feature_transaction_cat['InvoiceDate'].max()

#rfm

customer_features = feature_transaction_cat.groupby('CustomerID').agg({

'InvoiceDate': [

('recency', lambda x: (current_date - x.max()).days),

('first_purchase_date', 'min'),

('purchase_day', 'nunique'),

],

'InvoiceNo': [('nb_invoice', 'nunique')],

'Sales': [

('total_sales', 'sum')

],

'StockCode': [('nb_product', 'nunique')],

'category': [('nb_category', 'nunique')]

}).reset_index()

# Flatten column names

customer_features.columns = [

'CustomerID',

'recency',

'first_purchase_date',

'purchase_day',

'nb_invoice',

'total_sales',

'nb_product',

'nb_category'

]#almost always one purchase a day

(customer_features.purchase_day==customer_features.nb_invoice).mean()0.977021524141943customer_features['customer_lifetime'] = (current_date - customer_features['first_purchase_date']).dt.days

customer_features['avg_purchase_frequency'] = customer_features['customer_lifetime'] / customer_features['purchase_day']

customer_features['avg_purchase_value'] = customer_features['total_sales'] / customer_features['purchase_day']Category Preference

#category preference

category_sales = feature_transaction_cat.pivot_table(

values='Sales',

index='CustomerID',

columns='category',

aggfunc='sum',

fill_value=0

)

category_sales.columns = [i.lower().replace(' ','_') for i in category_sales.columns]

customer_features = customer_features.merge(category_sales, on='CustomerID', how='left')

total_sales = customer_features['total_sales']

for col in category_sales.columns:

percentage_col = f'per_{col}'

customer_features[percentage_col] = customer_features[col] / total_sales#make sure the categories are not too sparse

(customer_features.iloc[:,-9:]==0).mean()per_fashion_accessories 0.409831

per_home_decor 0.081734

per_kitchen_and_dining 0.122455

per_others 0.765561

per_outdoor_and_garden 0.507853

per_personal_care_and_wellness 0.448226

per_seasonal_and_holiday 0.369401

per_stationary_and_gifts 0.305410

per_toys_and_games 0.487202

dtype: float64Putting Them All Together

selected_features = [

'recency',

'purchase_day',

'total_sales',

'nb_product',

'nb_category',

'customer_lifetime',

'avg_purchase_frequency',

'avg_purchase_value',

'per_fashion_accessories',

'per_home_decor',

'per_kitchen_and_dining',

'per_others',

'per_outdoor_and_garden',

'per_personal_care_and_wellness',

'per_seasonal_and_holiday',

'per_stationary_and_gifts',

'per_toys_and_games']

outcome_variable = 'TargetSales'customer_features = customer_features[[ 'CustomerID']+selected_features]

customer_features.head()| CustomerID | recency | purchase_day | total_sales | nb_product | nb_category | customer_lifetime | avg_purchase_frequency | avg_purchase_value | per_fashion_accessories | per_home_decor | per_kitchen_and_dining | per_others | per_outdoor_and_garden | per_personal_care_and_wellness | per_seasonal_and_holiday | per_stationary_and_gifts | per_toys_and_games | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 12346 | 255 | 1 | 77183.60 | 1 | 1 | 255 | 255.000000 | 77183.600000 | 0.000000 | 0.000000 | 1.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| 1 | 12347 | 59 | 4 | 2079.07 | 65 | 7 | 247 | 61.750000 | 519.767500 | 0.145834 | 0.204168 | 0.294021 | 0.000000 | 0.005628 | 0.147614 | 0.000000 | 0.073013 | 0.129721 |

| 2 | 12348 | 5 | 3 | 904.44 | 10 | 4 | 248 | 82.666667 | 301.480000 | 0.000000 | 0.000000 | 0.000000 | 0.132679 | 0.000000 | 0.825970 | 0.018796 | 0.022555 | 0.000000 |

| 3 | 12350 | 239 | 1 | 334.40 | 17 | 7 | 239 | 239.000000 | 334.400000 | 0.240431 | 0.202751 | 0.116926 | 0.172548 | 0.000000 | 0.118421 | 0.000000 | 0.059211 | 0.089713 |

| 4 | 12352 | 2 | 7 | 2194.31 | 47 | 8 | 226 | 32.285714 | 313.472857 | 0.000000 | 0.196531 | 0.246187 | 0.474090 | 0.013535 | 0.016680 | 0.008066 | 0.024404 | 0.020508 |

Merge Features and Outcome

customer_features.shape, outcome_df.shape((3438, 18), (3438, 2))df = outcome_df.merge(customer_features, on='CustomerID').drop('CustomerID', axis=1)

df.shape(3438, 18)#correlations

df.iloc[:,1:].corr()| recency | purchase_day | total_sales | nb_product | nb_category | customer_lifetime | avg_purchase_frequency | avg_purchase_value | per_fashion_accessories | per_home_decor | per_kitchen_and_dining | per_others | per_outdoor_and_garden | per_personal_care_and_wellness | per_seasonal_and_holiday | per_stationary_and_gifts | per_toys_and_games | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| recency | 1.000000 | -0.299308 | -0.132344 | -0.287415 | -0.326772 | 0.298853 | 0.893973 | 0.008823 | -0.020861 | 0.022013 | 0.057244 | -0.016069 | 0.071268 | -0.082792 | -0.085681 | -0.017813 | -0.009686 |

| purchase_day | -0.299308 | 1.000000 | 0.540253 | 0.690345 | 0.304621 | 0.332109 | -0.331543 | 0.027488 | 0.030683 | 0.018684 | 0.025269 | 0.004299 | -0.019992 | -0.035665 | -0.020392 | -0.045384 | -0.028187 |

| total_sales | -0.132344 | 0.540253 | 1.000000 | 0.400467 | 0.137064 | 0.156018 | -0.148762 | 0.361138 | 0.016511 | -0.013819 | 0.047834 | 0.006398 | -0.029353 | -0.011937 | -0.016724 | -0.029181 | -0.013139 |

| nb_product | -0.287415 | 0.690345 | 0.400467 | 1.000000 | 0.555551 | 0.265594 | -0.294923 | 0.061039 | -0.003137 | -0.017516 | 0.035615 | -0.006842 | -0.026371 | -0.005309 | -0.016586 | 0.026716 | -0.010069 |

| nb_category | -0.326772 | 0.304621 | 0.137064 | 0.555551 | 1.000000 | 0.224232 | -0.321596 | 0.019955 | 0.004863 | -0.138372 | -0.039363 | 0.055555 | 0.041405 | 0.075882 | 0.015498 | 0.152869 | 0.111150 |

| customer_lifetime | 0.298853 | 0.332109 | 0.156018 | 0.265594 | 0.224232 | 1.000000 | 0.358431 | 0.014933 | 0.011220 | 0.066111 | 0.069175 | -0.019971 | 0.029726 | -0.127865 | -0.120399 | -0.050320 | -0.036484 |

| avg_purchase_frequency | 0.893973 | -0.331543 | -0.148762 | -0.294923 | -0.321596 | 0.358431 | 1.000000 | 0.009157 | -0.016093 | 0.027208 | 0.037053 | -0.027413 | 0.060369 | -0.070352 | -0.074799 | -0.000546 | -0.010612 |

| avg_purchase_value | 0.008823 | 0.027488 | 0.361138 | 0.061039 | 0.019955 | 0.014933 | 0.009157 | 1.000000 | -0.003187 | -0.056690 | 0.076862 | 0.015427 | -0.028884 | 0.004225 | -0.000200 | -0.012729 | -0.002396 |

| per_fashion_accessories | -0.020861 | 0.030683 | 0.016511 | -0.003137 | 0.004863 | 0.011220 | -0.016093 | -0.003187 | 1.000000 | -0.254015 | -0.177775 | -0.010436 | -0.082834 | -0.038493 | -0.124719 | -0.068166 | -0.051486 |

| per_home_decor | 0.022013 | 0.018684 | -0.013819 | -0.017516 | -0.138372 | 0.066111 | 0.027208 | -0.056690 | -0.254015 | 1.000000 | -0.481983 | -0.155784 | -0.080637 | -0.158837 | -0.165964 | -0.262313 | -0.245759 |

| per_kitchen_and_dining | 0.057244 | 0.025269 | 0.047834 | 0.035615 | -0.039363 | 0.069175 | 0.037053 | 0.076862 | -0.177775 | -0.481983 | 1.000000 | -0.013075 | -0.144698 | -0.117031 | -0.204235 | -0.173386 | -0.143931 |

| per_others | -0.016069 | 0.004299 | 0.006398 | -0.006842 | 0.055555 | -0.019971 | -0.027413 | 0.015427 | -0.010436 | -0.155784 | -0.013075 | 1.000000 | -0.062652 | 0.014794 | -0.047940 | -0.033975 | -0.040421 |

| per_outdoor_and_garden | 0.071268 | -0.019992 | -0.029353 | -0.026371 | 0.041405 | 0.029726 | 0.060369 | -0.028884 | -0.082834 | -0.080637 | -0.144698 | -0.062652 | 1.000000 | -0.045639 | -0.077947 | -0.057297 | -0.001034 |

| per_personal_care_and_wellness | -0.082792 | -0.035665 | -0.011937 | -0.005309 | 0.075882 | -0.127865 | -0.070352 | 0.004225 | -0.038493 | -0.158837 | -0.117031 | 0.014794 | -0.045639 | 1.000000 | -0.057926 | -0.025871 | -0.017022 |

| per_seasonal_and_holiday | -0.085681 | -0.020392 | -0.016724 | -0.016586 | 0.015498 | -0.120399 | -0.074799 | -0.000200 | -0.124719 | -0.165964 | -0.204235 | -0.047940 | -0.077947 | -0.057926 | 1.000000 | -0.019418 | -0.042970 |

| per_stationary_and_gifts | -0.017813 | -0.045384 | -0.029181 | 0.026716 | 0.152869 | -0.050320 | -0.000546 | -0.012729 | -0.068166 | -0.262313 | -0.173386 | -0.033975 | -0.057297 | -0.025871 | -0.019418 | 1.000000 | 0.172039 |

| per_toys_and_games | -0.009686 | -0.028187 | -0.013139 | -0.010069 | 0.111150 | -0.036484 | -0.010612 | -0.002396 | -0.051486 | -0.245759 | -0.143931 | -0.040421 | -0.001034 | -0.017022 | -0.042970 | 0.172039 | 1.000000 |

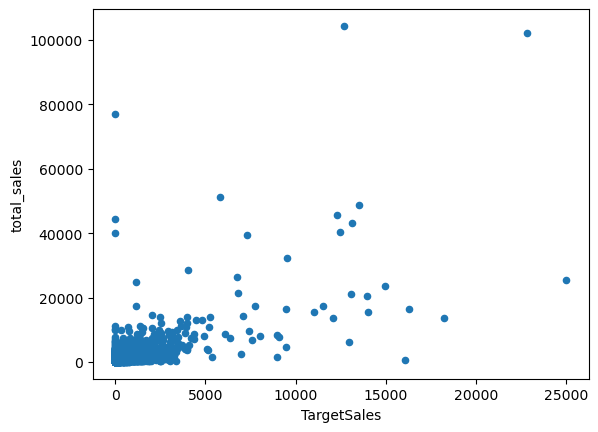

#target and most predictive variable

df[df.TargetSales<=25_000].plot.scatter(x='TargetSales',y='total_sales')

Train-Test Splits

We randomly split the dataset into train and test sets at 80/20 ratio. We also confirm the distribution of TargetSales is similar across percentiles and only different at the upper end.

#split into train-valid sets

train_df, test_df = train_test_split(df,

test_size=0.2,

random_state=112)pd.concat([train_df.TargetSales.describe(percentiles=[i/10 for i in range(10)]).reset_index(),

test_df.TargetSales.describe(percentiles=[i/10 for i in range(10)]).reset_index(),], axis=1)| index | TargetSales | index | TargetSales | |

|---|---|---|---|---|

| 0 | count | 2750.000000 | count | 688.000000 |

| 1 | mean | 642.650436 | mean | 760.558808 |

| 2 | std | 4015.305436 | std | 4024.524400 |

| 3 | min | 0.000000 | min | 0.000000 |

| 4 | 0% | 0.000000 | 0% | 0.000000 |

| 5 | 10% | 0.000000 | 10% | 0.000000 |

| 6 | 20% | 0.000000 | 20% | 0.000000 |

| 7 | 30% | 0.000000 | 30% | 0.000000 |

| 8 | 40% | 0.000000 | 40% | 0.000000 |

| 9 | 50% | 91.350000 | 50% | 113.575000 |

| 10 | 60% | 260.308000 | 60% | 277.836000 |

| 11 | 70% | 426.878000 | 70% | 418.187000 |

| 12 | 80% | 694.164000 | 80% | 759.582000 |

| 13 | 90% | 1272.997000 | 90% | 1255.670000 |

| 14 | max | 168469.600000 | max | 77099.380000 |

Baseline Regression

The most naive solution is to predict TargetSales based on the features. We use a stacked ensemble of LightGBM, CatBoost, XGBoost, Random Forest and Extra Trees via AutoGluon. We train with good_quality preset, stated to be “Stronger than any other AutoML Framework”, for speedy training and inference but feel free to try more performant option. We exclude the neural-network models as they require further preprocessing of the features.

We use an industry-grade, non-parametric model to be as close to a real use case as possible and make a point that our methodology works not only in a toy-dataset setup.

preset = 'good_quality'predictor = TabularPredictor(label='TargetSales').fit(train_df[selected_features + ['TargetSales']],

presets=preset,

excluded_model_types=['NN_TORCH','FASTAI','KNN'],

)No path specified. Models will be saved in: "AutogluonModels/ag-20241214_134505"

Verbosity: 2 (Standard Logging)

=================== System Info ===================

AutoGluon Version: 1.1.1

Python Version: 3.9.12

Operating System: Linux

Platform Machine: x86_64

Platform Version: #1 SMP Wed Oct 23 01:22:11 UTC 2024

CPU Count: 64

Memory Avail: 470.24 GB / 480.23 GB (97.9%)

Disk Space Avail: 1451.64 GB / 1968.52 GB (73.7%)

===================================================

Presets specified: ['good_quality']

Setting dynamic_stacking from 'auto' to True. Reason: Enable dynamic_stacking when use_bag_holdout is disabled. (use_bag_holdout=False)

Stack configuration (auto_stack=True): num_stack_levels=1, num_bag_folds=8, num_bag_sets=1

Note: `save_bag_folds=False`! This will greatly reduce peak disk usage during fit (by ~8x), but runs the risk of an out-of-memory error during model refit if memory is small relative to the data size.

You can avoid this risk by setting `save_bag_folds=True`.

DyStack is enabled (dynamic_stacking=True). AutoGluon will try to determine whether the input data is affected by stacked overfitting and enable or disable stacking as a consequence.

This is used to identify the optimal `num_stack_levels` value. Copies of AutoGluon will be fit on subsets of the data. Then holdout validation data is used to detect stacked overfitting.

Running DyStack for up to 900s of the 3600s of remaining time (25%).

2024-12-14 13:45:05,288 INFO util.py:154 -- Outdated packages:

ipywidgets==7.6.5 found, needs ipywidgets>=8

Run `pip install -U ipywidgets`, then restart the notebook server for rich notebook output.

/home/charipol/miniconda3/lib/python3.9/site-packages/autogluon/tabular/predictor/predictor.py:1242: UserWarning: Failed to use ray for memory safe fits. Falling back to normal fit. Error: ValueError('ray==2.40.0 detected. 2.10.0 <= ray < 2.11.0 is required. You can use pip to install certain version of ray `pip install ray==2.10.0` ')

stacked_overfitting = self._sub_fit_memory_save_wrapper(

Context path: "AutogluonModels/ag-20241214_134505/ds_sub_fit/sub_fit_ho"

Running DyStack sub-fit ...

Beginning AutoGluon training ... Time limit = 900s

AutoGluon will save models to "AutogluonModels/ag-20241214_134505/ds_sub_fit/sub_fit_ho"

Train Data Rows: 2444

Train Data Columns: 17

Label Column: TargetSales

Problem Type: regression

Preprocessing data ...

Using Feature Generators to preprocess the data ...

Fitting AutoMLPipelineFeatureGenerator...

Available Memory: 481515.82 MB

Train Data (Original) Memory Usage: 0.32 MB (0.0% of available memory)

Inferring data type of each feature based on column values. Set feature_metadata_in to manually specify special dtypes of the features.

Stage 1 Generators:

Fitting AsTypeFeatureGenerator...

Stage 2 Generators:

Fitting FillNaFeatureGenerator...

Stage 3 Generators:

Fitting IdentityFeatureGenerator...

Stage 4 Generators:

Fitting DropUniqueFeatureGenerator...

Stage 5 Generators:

Fitting DropDuplicatesFeatureGenerator...

Types of features in original data (raw dtype, special dtypes):

('float', []) : 12 | ['total_sales', 'avg_purchase_frequency', 'avg_purchase_value', 'per_fashion_accessories', 'per_home_decor', ...]

('int', []) : 5 | ['recency', 'purchase_day', 'nb_product', 'nb_category', 'customer_lifetime']

Types of features in processed data (raw dtype, special dtypes):

('float', []) : 12 | ['total_sales', 'avg_purchase_frequency', 'avg_purchase_value', 'per_fashion_accessories', 'per_home_decor', ...]

('int', []) : 5 | ['recency', 'purchase_day', 'nb_product', 'nb_category', 'customer_lifetime']

0.0s = Fit runtime

17 features in original data used to generate 17 features in processed data.

Train Data (Processed) Memory Usage: 0.32 MB (0.0% of available memory)

Data preprocessing and feature engineering runtime = 0.06s ...

AutoGluon will gauge predictive performance using evaluation metric: 'root_mean_squared_error'

This metric's sign has been flipped to adhere to being higher_is_better. The metric score can be multiplied by -1 to get the metric value.

To change this, specify the eval_metric parameter of Predictor()

User-specified model hyperparameters to be fit:

{

'NN_TORCH': {},

'GBM': [{'extra_trees': True, 'ag_args': {'name_suffix': 'XT'}}, {}, 'GBMLarge'],

'CAT': {},

'XGB': {},

'FASTAI': {},

'RF': [{'criterion': 'gini', 'max_depth': 15, 'ag_args': {'name_suffix': 'Gini', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'entropy', 'max_depth': 15, 'ag_args': {'name_suffix': 'Entr', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'squared_error', 'max_depth': 15, 'ag_args': {'name_suffix': 'MSE', 'problem_types': ['regression', 'quantile']}}],

'XT': [{'criterion': 'gini', 'max_depth': 15, 'ag_args': {'name_suffix': 'Gini', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'entropy', 'max_depth': 15, 'ag_args': {'name_suffix': 'Entr', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'squared_error', 'max_depth': 15, 'ag_args': {'name_suffix': 'MSE', 'problem_types': ['regression', 'quantile']}}],

}

AutoGluon will fit 2 stack levels (L1 to L2) ...

Excluded models: ['NN_TORCH', 'FASTAI'] (Specified by `excluded_model_types`)

Fitting 7 L1 models ...

Fitting model: LightGBMXT_BAG_L1 ... Training model for up to 599.62s of the 899.66s of remaining time.

Will use sequential fold fitting strategy because import of ray failed. Reason: ray==2.40.0 detected. 2.10.0 <= ray < 2.11.0 is required. You can use pip to install certain version of ray `pip install ray==2.10.0`

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-3990.4801 = Validation score (-root_mean_squared_error)

6.36s = Training runtime

0.01s = Validation runtime

Fitting model: LightGBM_BAG_L1 ... Training model for up to 593.17s of the 893.2s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-3921.7042 = Validation score (-root_mean_squared_error)

5.31s = Training runtime

0.01s = Validation runtime

Fitting model: RandomForestMSE_BAG_L1 ... Training model for up to 587.75s of the 887.78s of remaining time.

-4516.1791 = Validation score (-root_mean_squared_error)

0.89s = Training runtime

0.17s = Validation runtime

Fitting model: CatBoost_BAG_L1 ... Training model for up to 586.59s of the 886.62s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-3857.3111 = Validation score (-root_mean_squared_error)

10.49s = Training runtime

0.02s = Validation runtime

Fitting model: ExtraTreesMSE_BAG_L1 ... Training model for up to 575.99s of the 876.03s of remaining time.

-3900.3038 = Validation score (-root_mean_squared_error)

0.66s = Training runtime

0.17s = Validation runtime

Fitting model: XGBoost_BAG_L1 ... Training model for up to 575.08s of the 875.11s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-3941.3599 = Validation score (-root_mean_squared_error)

6.1s = Training runtime

0.03s = Validation runtime

Fitting model: LightGBMLarge_BAG_L1 ... Training model for up to 568.89s of the 868.93s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-3912.54 = Validation score (-root_mean_squared_error)

12.28s = Training runtime

0.01s = Validation runtime

Fitting model: WeightedEnsemble_L2 ... Training model for up to 360.0s of the 856.55s of remaining time.

Ensemble Weights: {'CatBoost_BAG_L1': 0.6, 'ExtraTreesMSE_BAG_L1': 0.36, 'LightGBM_BAG_L1': 0.04}

-3835.4224 = Validation score (-root_mean_squared_error)

0.02s = Training runtime

0.0s = Validation runtime

Excluded models: ['NN_TORCH', 'FASTAI'] (Specified by `excluded_model_types`)

Fitting 7 L2 models ...

Fitting model: LightGBMXT_BAG_L2 ... Training model for up to 856.47s of the 856.47s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-3941.7891 = Validation score (-root_mean_squared_error)

4.54s = Training runtime

0.01s = Validation runtime

Fitting model: LightGBM_BAG_L2 ... Training model for up to 851.87s of the 851.86s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy[1000] valid_set's rmse: 4314.99 -3894.7078 = Validation score (-root_mean_squared_error)

5.58s = Training runtime

0.01s = Validation runtime

Fitting model: RandomForestMSE_BAG_L2 ... Training model for up to 846.2s of the 846.19s of remaining time.

-4525.2057 = Validation score (-root_mean_squared_error)

0.87s = Training runtime

0.17s = Validation runtime

Fitting model: CatBoost_BAG_L2 ... Training model for up to 845.08s of the 845.07s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-3904.7749 = Validation score (-root_mean_squared_error)

5.45s = Training runtime

0.02s = Validation runtime

Fitting model: ExtraTreesMSE_BAG_L2 ... Training model for up to 839.51s of the 839.5s of remaining time.

-3952.2022 = Validation score (-root_mean_squared_error)

0.68s = Training runtime

0.17s = Validation runtime

Fitting model: XGBoost_BAG_L2 ... Training model for up to 838.57s of the 838.56s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-3929.2019 = Validation score (-root_mean_squared_error)

6.6s = Training runtime

0.04s = Validation runtime

Fitting model: LightGBMLarge_BAG_L2 ... Training model for up to 831.87s of the 831.86s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-3912.6409 = Validation score (-root_mean_squared_error)

13.59s = Training runtime

0.01s = Validation runtime

Fitting model: WeightedEnsemble_L3 ... Training model for up to 360.0s of the 818.17s of remaining time.

Ensemble Weights: {'CatBoost_BAG_L1': 0.36, 'ExtraTreesMSE_BAG_L1': 0.32, 'LightGBM_BAG_L2': 0.32}

-3823.1639 = Validation score (-root_mean_squared_error)

0.03s = Training runtime

0.0s = Validation runtime

AutoGluon training complete, total runtime = 81.64s ... Best model: WeightedEnsemble_L3 | Estimated inference throughput: 2283.2 rows/s (306 batch size)

Automatically performing refit_full as a post-fit operation (due to `.fit(..., refit_full=True)`

Refitting models via `predictor.refit_full` using all of the data (combined train and validation)...

Models trained in this way will have the suffix "_FULL" and have NaN validation score.

This process is not bound by time_limit, but should take less time than the original `predictor.fit` call.

To learn more, refer to the `.refit_full` method docstring which explains how "_FULL" models differ from normal models.

Fitting 1 L1 models ...

Fitting model: LightGBMXT_BAG_L1_FULL ...

0.25s = Training runtime

Fitting 1 L1 models ...

Fitting model: LightGBM_BAG_L1_FULL ...

0.21s = Training runtime

Fitting model: RandomForestMSE_BAG_L1_FULL | Skipping fit via cloning parent ...

0.89s = Training runtime

0.17s = Validation runtime

Fitting 1 L1 models ...

Fitting model: CatBoost_BAG_L1_FULL ...

0.6s = Training runtime

Fitting model: ExtraTreesMSE_BAG_L1_FULL | Skipping fit via cloning parent ...

0.66s = Training runtime

0.17s = Validation runtime

Fitting 1 L1 models ...

Fitting model: XGBoost_BAG_L1_FULL ...

0.12s = Training runtime

Fitting 1 L1 models ...

Fitting model: LightGBMLarge_BAG_L1_FULL ...

0.34s = Training runtime

Fitting model: WeightedEnsemble_L2_FULL | Skipping fit via cloning parent ...

Ensemble Weights: {'CatBoost_BAG_L1': 0.6, 'ExtraTreesMSE_BAG_L1': 0.36, 'LightGBM_BAG_L1': 0.04}

0.02s = Training runtime

Fitting 1 L2 models ...

Fitting model: LightGBMXT_BAG_L2_FULL ...

0.21s = Training runtime

Fitting 1 L2 models ...

Fitting model: LightGBM_BAG_L2_FULL ...

0.31s = Training runtime

Fitting model: RandomForestMSE_BAG_L2_FULL | Skipping fit via cloning parent ...

0.87s = Training runtime

0.17s = Validation runtime

Fitting 1 L2 models ...

Fitting model: CatBoost_BAG_L2_FULL ...

0.17s = Training runtime

Fitting model: ExtraTreesMSE_BAG_L2_FULL | Skipping fit via cloning parent ...

0.68s = Training runtime

0.17s = Validation runtime

Fitting 1 L2 models ...

Fitting model: XGBoost_BAG_L2_FULL ...

0.19s = Training runtime

Fitting 1 L2 models ...

Fitting model: LightGBMLarge_BAG_L2_FULL ...

0.43s = Training runtime

Fitting model: WeightedEnsemble_L3_FULL | Skipping fit via cloning parent ...

Ensemble Weights: {'CatBoost_BAG_L1': 0.36, 'ExtraTreesMSE_BAG_L1': 0.32, 'LightGBM_BAG_L2': 0.32}

0.03s = Training runtime

Updated best model to "WeightedEnsemble_L3_FULL" (Previously "WeightedEnsemble_L3"). AutoGluon will default to using "WeightedEnsemble_L3_FULL" for predict() and predict_proba().

Refit complete, total runtime = 3.45s ... Best model: "WeightedEnsemble_L3_FULL"

TabularPredictor saved. To load, use: predictor = TabularPredictor.load("AutogluonModels/ag-20241214_134505/ds_sub_fit/sub_fit_ho")

Deleting DyStack predictor artifacts (clean_up_fits=True) ...

Leaderboard on holdout data (DyStack):

model score_holdout score_val eval_metric pred_time_test pred_time_val fit_time pred_time_test_marginal pred_time_val_marginal fit_time_marginal stack_level can_infer fit_order

0 CatBoost_BAG_L1_FULL -803.899801 -3857.311112 root_mean_squared_error 0.006679 NaN 0.597072 0.006679 NaN 0.597072 1 True 4

1 WeightedEnsemble_L2_FULL -813.259482 -3835.422447 root_mean_squared_error 0.120649 NaN 1.485833 0.002517 NaN 0.018860 2 True 8

2 CatBoost_BAG_L2_FULL -838.233626 -3904.774934 root_mean_squared_error 0.258556 NaN 3.238374 0.006184 NaN 0.173173 2 True 12

3 RandomForestMSE_BAG_L1_FULL -847.825565 -4516.179095 root_mean_squared_error 0.113628 0.174859 0.894842 0.113628 0.174859 0.894842 1 True 3

4 ExtraTreesMSE_BAG_L2_FULL -890.912998 -3952.202176 root_mean_squared_error 0.360469 NaN 3.743573 0.108097 0.171160 0.678372 2 True 13

5 ExtraTreesMSE_BAG_L1_FULL -922.896541 -3900.303809 root_mean_squared_error 0.109015 0.173588 0.658955 0.109015 0.173588 0.658955 1 True 5

6 WeightedEnsemble_L3_FULL -977.887954 -3823.163850 root_mean_squared_error 0.260014 NaN 3.409128 0.003530 NaN 0.031533 3 True 16

7 LightGBM_BAG_L1_FULL -1086.123687 -3921.704247 root_mean_squared_error 0.002438 NaN 0.210945 0.002438 NaN 0.210945 1 True 2

8 RandomForestMSE_BAG_L2_FULL -1090.066132 -4525.205744 root_mean_squared_error 0.349192 NaN 3.933684 0.096820 0.174712 0.868483 2 True 11

9 LightGBMXT_BAG_L1_FULL -1230.340360 -3990.480139 root_mean_squared_error 0.002607 NaN 0.245293 0.002607 NaN 0.245293 1 True 1

10 LightGBMXT_BAG_L2_FULL -1234.815155 -3941.789134 root_mean_squared_error 0.255407 NaN 3.276018 0.003035 NaN 0.210817 2 True 9

11 LightGBMLarge_BAG_L1_FULL -1345.024278 -3912.540001 root_mean_squared_error 0.004740 NaN 0.335057 0.004740 NaN 0.335057 1 True 7

12 LightGBMLarge_BAG_L2_FULL -1640.347524 -3912.640942 root_mean_squared_error 0.262513 NaN 3.497248 0.010141 NaN 0.432046 2 True 15

13 LightGBM_BAG_L2_FULL -1743.255667 -3894.707823 root_mean_squared_error 0.256483 NaN 3.377595 0.004111 NaN 0.312394 2 True 10

14 XGBoost_BAG_L1_FULL -2245.433966 -3941.359884 root_mean_squared_error 0.013265 NaN 0.123036 0.013265 NaN 0.123036 1 True 6

15 XGBoost_BAG_L2_FULL -2454.083373 -3929.201875 root_mean_squared_error 0.267445 NaN 3.256454 0.015073 NaN 0.191253 2 True 14

0 = Optimal num_stack_levels (Stacked Overfitting Occurred: True)

86s = DyStack runtime | 3514s = Remaining runtime

Starting main fit with num_stack_levels=0.

For future fit calls on this dataset, you can skip DyStack to save time: `predictor.fit(..., dynamic_stacking=False, num_stack_levels=0)`

Beginning AutoGluon training ... Time limit = 3514s

AutoGluon will save models to "AutogluonModels/ag-20241214_134505"

Train Data Rows: 2750

Train Data Columns: 17

Label Column: TargetSales

Problem Type: regression

Preprocessing data ...

Using Feature Generators to preprocess the data ...

Fitting AutoMLPipelineFeatureGenerator...

Available Memory: 480433.27 MB

Train Data (Original) Memory Usage: 0.36 MB (0.0% of available memory)

Inferring data type of each feature based on column values. Set feature_metadata_in to manually specify special dtypes of the features.

Stage 1 Generators:

Fitting AsTypeFeatureGenerator...

Stage 2 Generators:

Fitting FillNaFeatureGenerator...

Stage 3 Generators:

Fitting IdentityFeatureGenerator...

Stage 4 Generators:

Fitting DropUniqueFeatureGenerator...

Stage 5 Generators:

Fitting DropDuplicatesFeatureGenerator...

Types of features in original data (raw dtype, special dtypes):

('float', []) : 12 | ['total_sales', 'avg_purchase_frequency', 'avg_purchase_value', 'per_fashion_accessories', 'per_home_decor', ...]

('int', []) : 5 | ['recency', 'purchase_day', 'nb_product', 'nb_category', 'customer_lifetime']

Types of features in processed data (raw dtype, special dtypes):

('float', []) : 12 | ['total_sales', 'avg_purchase_frequency', 'avg_purchase_value', 'per_fashion_accessories', 'per_home_decor', ...]

('int', []) : 5 | ['recency', 'purchase_day', 'nb_product', 'nb_category', 'customer_lifetime']

0.1s = Fit runtime

17 features in original data used to generate 17 features in processed data.

Train Data (Processed) Memory Usage: 0.36 MB (0.0% of available memory)

Data preprocessing and feature engineering runtime = 0.08s ...

AutoGluon will gauge predictive performance using evaluation metric: 'root_mean_squared_error'

This metric's sign has been flipped to adhere to being higher_is_better. The metric score can be multiplied by -1 to get the metric value.

To change this, specify the eval_metric parameter of Predictor()

User-specified model hyperparameters to be fit:

{

'NN_TORCH': {},

'GBM': [{'extra_trees': True, 'ag_args': {'name_suffix': 'XT'}}, {}, 'GBMLarge'],

'CAT': {},

'XGB': {},

'FASTAI': {},

'RF': [{'criterion': 'gini', 'max_depth': 15, 'ag_args': {'name_suffix': 'Gini', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'entropy', 'max_depth': 15, 'ag_args': {'name_suffix': 'Entr', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'squared_error', 'max_depth': 15, 'ag_args': {'name_suffix': 'MSE', 'problem_types': ['regression', 'quantile']}}],

'XT': [{'criterion': 'gini', 'max_depth': 15, 'ag_args': {'name_suffix': 'Gini', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'entropy', 'max_depth': 15, 'ag_args': {'name_suffix': 'Entr', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'squared_error', 'max_depth': 15, 'ag_args': {'name_suffix': 'MSE', 'problem_types': ['regression', 'quantile']}}],

}

Excluded models: ['NN_TORCH', 'FASTAI'] (Specified by `excluded_model_types`)

Fitting 7 L1 models ...

Fitting model: LightGBMXT_BAG_L1 ... Training model for up to 3513.91s of the 3513.9s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy[1000] valid_set's rmse: 9800.07

[2000] valid_set's rmse: 9792.42

[3000] valid_set's rmse: 9791.47 -3713.1197 = Validation score (-root_mean_squared_error)

8.47s = Training runtime

0.01s = Validation runtime

Fitting model: LightGBM_BAG_L1 ... Training model for up to 3505.33s of the 3505.33s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy[1000] valid_set's rmse: 9561.64

[2000] valid_set's rmse: 9538.68 -3635.1505 = Validation score (-root_mean_squared_error)

6.15s = Training runtime

0.01s = Validation runtime

Fitting model: RandomForestMSE_BAG_L1 ... Training model for up to 3499.08s of the 3499.08s of remaining time.

-4135.0334 = Validation score (-root_mean_squared_error)

0.82s = Training runtime

0.18s = Validation runtime

Fitting model: CatBoost_BAG_L1 ... Training model for up to 3497.99s of the 3497.99s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-3669.0125 = Validation score (-root_mean_squared_error)

18.54s = Training runtime

0.02s = Validation runtime

Fitting model: ExtraTreesMSE_BAG_L1 ... Training model for up to 3479.34s of the 3479.34s of remaining time.

-3678.3921 = Validation score (-root_mean_squared_error)

0.66s = Training runtime

0.18s = Validation runtime

Fitting model: XGBoost_BAG_L1 ... Training model for up to 3478.41s of the 3478.41s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-3785.5048 = Validation score (-root_mean_squared_error)

5.79s = Training runtime

0.04s = Validation runtime

Fitting model: LightGBMLarge_BAG_L1 ... Training model for up to 3472.52s of the 3472.51s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-3704.5742 = Validation score (-root_mean_squared_error)

11.91s = Training runtime

0.01s = Validation runtime

Fitting model: WeightedEnsemble_L2 ... Training model for up to 360.0s of the 3460.51s of remaining time.

Ensemble Weights: {'LightGBM_BAG_L1': 0.5, 'ExtraTreesMSE_BAG_L1': 0.35, 'CatBoost_BAG_L1': 0.15}

-3608.5561 = Validation score (-root_mean_squared_error)

0.02s = Training runtime

0.0s = Validation runtime

AutoGluon training complete, total runtime = 53.55s ... Best model: WeightedEnsemble_L2 | Estimated inference throughput: 6308.2 rows/s (344 batch size)

Automatically performing refit_full as a post-fit operation (due to `.fit(..., refit_full=True)`

Refitting models via `predictor.refit_full` using all of the data (combined train and validation)...

Models trained in this way will have the suffix "_FULL" and have NaN validation score.

This process is not bound by time_limit, but should take less time than the original `predictor.fit` call.

To learn more, refer to the `.refit_full` method docstring which explains how "_FULL" models differ from normal models.

Fitting 1 L1 models ...

Fitting model: LightGBMXT_BAG_L1_FULL ...

0.57s = Training runtime

Fitting 1 L1 models ...

Fitting model: LightGBM_BAG_L1_FULL ...

0.36s = Training runtime

Fitting model: RandomForestMSE_BAG_L1_FULL | Skipping fit via cloning parent ...

0.82s = Training runtime

0.18s = Validation runtime

Fitting 1 L1 models ...

Fitting model: CatBoost_BAG_L1_FULL ...

0.81s = Training runtime

Fitting model: ExtraTreesMSE_BAG_L1_FULL | Skipping fit via cloning parent ...

0.66s = Training runtime

0.18s = Validation runtime

Fitting 1 L1 models ...

Fitting model: XGBoost_BAG_L1_FULL ...

0.16s = Training runtime

Fitting 1 L1 models ...

Fitting model: LightGBMLarge_BAG_L1_FULL ...

0.29s = Training runtime

Fitting model: WeightedEnsemble_L2_FULL | Skipping fit via cloning parent ...

Ensemble Weights: {'LightGBM_BAG_L1': 0.5, 'ExtraTreesMSE_BAG_L1': 0.35, 'CatBoost_BAG_L1': 0.15}

0.02s = Training runtime

Updated best model to "WeightedEnsemble_L2_FULL" (Previously "WeightedEnsemble_L2"). AutoGluon will default to using "WeightedEnsemble_L2_FULL" for predict() and predict_proba().

Refit complete, total runtime = 2.49s ... Best model: "WeightedEnsemble_L2_FULL"

TabularPredictor saved. To load, use: predictor = TabularPredictor.load("AutogluonModels/ag-20241214_134505")test_df['pred_baseline'] = predictor.predict(test_df[selected_features])metric_baseline = calculate_regression_metrics(test_df['TargetSales'], test_df['pred_baseline'])

metric_baseline['model'] = 'baseline'

metric_baseline{'root_mean_squared_error': 3162.478744240967,

'mean_squared_error': 10001271.807775924,

'mean_absolute_error': 715.6442657130541,

'r2': 0.3816166296854987,

'pearsonr': 0.6190719671013133,

'spearmanr': 0.47008461549340863,

'median_absolute_error': 232.98208312988282,

'earths_mover_distance': 287.77728784026124,

'model': 'baseline'}Regression on Winsorized Outcome

One possible approach to deal with long/fat-tailed outcome is to train on a winsorized outcome. This may lead to better performance when tested on a winsorized outcome but not so much on original outcome.

outlier_per = 0.99

outlier_cap_train = train_df['TargetSales'].quantile(outlier_per)

outlier_cap_train7180.805199999947#winsorize

train_df['TargetSales_win'] = train_df['TargetSales'].map(lambda x: outlier_cap_train if x> outlier_cap_train else x)

test_df['TargetSales_win'] = test_df['TargetSales'].map(lambda x: outlier_cap_train if x> outlier_cap_train else x)predictor = TabularPredictor(label='TargetSales_win').fit(train_df[selected_features+['TargetSales_win']],

presets=preset,

excluded_model_types=['NN_TORCH','FASTAI','KNN'],

)No path specified. Models will be saved in: "AutogluonModels/ag-20241214_134727"

Verbosity: 2 (Standard Logging)

=================== System Info ===================

AutoGluon Version: 1.1.1

Python Version: 3.9.12

Operating System: Linux

Platform Machine: x86_64

Platform Version: #1 SMP Wed Oct 23 01:22:11 UTC 2024

CPU Count: 64

Memory Avail: 468.94 GB / 480.23 GB (97.6%)

Disk Space Avail: 1451.56 GB / 1968.52 GB (73.7%)

===================================================

Presets specified: ['good_quality']

Setting dynamic_stacking from 'auto' to True. Reason: Enable dynamic_stacking when use_bag_holdout is disabled. (use_bag_holdout=False)

Stack configuration (auto_stack=True): num_stack_levels=1, num_bag_folds=8, num_bag_sets=1

Note: `save_bag_folds=False`! This will greatly reduce peak disk usage during fit (by ~8x), but runs the risk of an out-of-memory error during model refit if memory is small relative to the data size.

You can avoid this risk by setting `save_bag_folds=True`.

DyStack is enabled (dynamic_stacking=True). AutoGluon will try to determine whether the input data is affected by stacked overfitting and enable or disable stacking as a consequence.

This is used to identify the optimal `num_stack_levels` value. Copies of AutoGluon will be fit on subsets of the data. Then holdout validation data is used to detect stacked overfitting.

Running DyStack for up to 900s of the 3600s of remaining time (25%).

/home/charipol/miniconda3/lib/python3.9/site-packages/autogluon/tabular/predictor/predictor.py:1242: UserWarning: Failed to use ray for memory safe fits. Falling back to normal fit. Error: ValueError('ray==2.40.0 detected. 2.10.0 <= ray < 2.11.0 is required. You can use pip to install certain version of ray `pip install ray==2.10.0` ')

stacked_overfitting = self._sub_fit_memory_save_wrapper(

Context path: "AutogluonModels/ag-20241214_134727/ds_sub_fit/sub_fit_ho"

Running DyStack sub-fit ...

Beginning AutoGluon training ... Time limit = 900s

AutoGluon will save models to "AutogluonModels/ag-20241214_134727/ds_sub_fit/sub_fit_ho"

Train Data Rows: 2444

Train Data Columns: 17

Label Column: TargetSales_win

Problem Type: regression

Preprocessing data ...

Using Feature Generators to preprocess the data ...

Fitting AutoMLPipelineFeatureGenerator...

Available Memory: 480196.12 MB

Train Data (Original) Memory Usage: 0.32 MB (0.0% of available memory)

Inferring data type of each feature based on column values. Set feature_metadata_in to manually specify special dtypes of the features.

Stage 1 Generators:

Fitting AsTypeFeatureGenerator...

Stage 2 Generators:

Fitting FillNaFeatureGenerator...

Stage 3 Generators:

Fitting IdentityFeatureGenerator...

Stage 4 Generators:

Fitting DropUniqueFeatureGenerator...

Stage 5 Generators:

Fitting DropDuplicatesFeatureGenerator...

Types of features in original data (raw dtype, special dtypes):

('float', []) : 12 | ['total_sales', 'avg_purchase_frequency', 'avg_purchase_value', 'per_fashion_accessories', 'per_home_decor', ...]

('int', []) : 5 | ['recency', 'purchase_day', 'nb_product', 'nb_category', 'customer_lifetime']

Types of features in processed data (raw dtype, special dtypes):

('float', []) : 12 | ['total_sales', 'avg_purchase_frequency', 'avg_purchase_value', 'per_fashion_accessories', 'per_home_decor', ...]

('int', []) : 5 | ['recency', 'purchase_day', 'nb_product', 'nb_category', 'customer_lifetime']

0.1s = Fit runtime

17 features in original data used to generate 17 features in processed data.

Train Data (Processed) Memory Usage: 0.32 MB (0.0% of available memory)

Data preprocessing and feature engineering runtime = 0.07s ...

AutoGluon will gauge predictive performance using evaluation metric: 'root_mean_squared_error'

This metric's sign has been flipped to adhere to being higher_is_better. The metric score can be multiplied by -1 to get the metric value.

To change this, specify the eval_metric parameter of Predictor()

User-specified model hyperparameters to be fit:

{

'NN_TORCH': {},

'GBM': [{'extra_trees': True, 'ag_args': {'name_suffix': 'XT'}}, {}, 'GBMLarge'],

'CAT': {},

'XGB': {},

'FASTAI': {},

'RF': [{'criterion': 'gini', 'max_depth': 15, 'ag_args': {'name_suffix': 'Gini', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'entropy', 'max_depth': 15, 'ag_args': {'name_suffix': 'Entr', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'squared_error', 'max_depth': 15, 'ag_args': {'name_suffix': 'MSE', 'problem_types': ['regression', 'quantile']}}],

'XT': [{'criterion': 'gini', 'max_depth': 15, 'ag_args': {'name_suffix': 'Gini', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'entropy', 'max_depth': 15, 'ag_args': {'name_suffix': 'Entr', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'squared_error', 'max_depth': 15, 'ag_args': {'name_suffix': 'MSE', 'problem_types': ['regression', 'quantile']}}],

}

AutoGluon will fit 2 stack levels (L1 to L2) ...

Excluded models: ['NN_TORCH', 'FASTAI'] (Specified by `excluded_model_types`)

Fitting 7 L1 models ...

Fitting model: LightGBMXT_BAG_L1 ... Training model for up to 599.8s of the 899.93s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-704.0735 = Validation score (-root_mean_squared_error)

4.98s = Training runtime

0.01s = Validation runtime

Fitting model: LightGBM_BAG_L1 ... Training model for up to 594.77s of the 894.89s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-700.8029 = Validation score (-root_mean_squared_error)

4.27s = Training runtime

0.01s = Validation runtime

Fitting model: RandomForestMSE_BAG_L1 ... Training model for up to 590.42s of the 890.54s of remaining time.

-708.5579 = Validation score (-root_mean_squared_error)

0.74s = Training runtime

0.18s = Validation runtime

Fitting model: CatBoost_BAG_L1 ... Training model for up to 589.41s of the 889.53s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-682.2162 = Validation score (-root_mean_squared_error)

6.8s = Training runtime

0.02s = Validation runtime

Fitting model: ExtraTreesMSE_BAG_L1 ... Training model for up to 582.52s of the 882.64s of remaining time.

-688.9972 = Validation score (-root_mean_squared_error)

0.64s = Training runtime

0.18s = Validation runtime

Fitting model: XGBoost_BAG_L1 ... Training model for up to 581.63s of the 881.75s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-710.5012 = Validation score (-root_mean_squared_error)

5.15s = Training runtime

0.03s = Validation runtime

Fitting model: LightGBMLarge_BAG_L1 ... Training model for up to 576.4s of the 876.52s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-715.783 = Validation score (-root_mean_squared_error)

16.49s = Training runtime

0.01s = Validation runtime

Fitting model: WeightedEnsemble_L2 ... Training model for up to 360.0s of the 859.93s of remaining time.

Ensemble Weights: {'CatBoost_BAG_L1': 0.5, 'ExtraTreesMSE_BAG_L1': 0.25, 'XGBoost_BAG_L1': 0.2, 'LightGBMXT_BAG_L1': 0.05}

-677.7482 = Validation score (-root_mean_squared_error)

0.03s = Training runtime

0.0s = Validation runtime

Excluded models: ['NN_TORCH', 'FASTAI'] (Specified by `excluded_model_types`)

Fitting 7 L2 models ...

Fitting model: LightGBMXT_BAG_L2 ... Training model for up to 859.84s of the 859.83s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-701.3347 = Validation score (-root_mean_squared_error)

5.45s = Training runtime

0.01s = Validation runtime

Fitting model: LightGBM_BAG_L2 ... Training model for up to 854.32s of the 854.31s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy[1000] valid_set's rmse: 622.335

[2000] valid_set's rmse: 619.896

[3000] valid_set's rmse: 619.36

[4000] valid_set's rmse: 619.228

[5000] valid_set's rmse: 619.165

[6000] valid_set's rmse: 619.15

[7000] valid_set's rmse: 619.144

[8000] valid_set's rmse: 619.142

[9000] valid_set's rmse: 619.14

[10000] valid_set's rmse: 619.14 -669.5782 = Validation score (-root_mean_squared_error)

16.28s = Training runtime

0.04s = Validation runtime

Fitting model: RandomForestMSE_BAG_L2 ... Training model for up to 837.9s of the 837.89s of remaining time.

-702.8194 = Validation score (-root_mean_squared_error)

0.88s = Training runtime

0.18s = Validation runtime

Fitting model: CatBoost_BAG_L2 ... Training model for up to 836.75s of the 836.74s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-679.668 = Validation score (-root_mean_squared_error)

13.36s = Training runtime

0.03s = Validation runtime

Fitting model: ExtraTreesMSE_BAG_L2 ... Training model for up to 823.27s of the 823.26s of remaining time.

-688.2802 = Validation score (-root_mean_squared_error)

0.68s = Training runtime

0.17s = Validation runtime

Fitting model: XGBoost_BAG_L2 ... Training model for up to 822.32s of the 822.31s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-706.5666 = Validation score (-root_mean_squared_error)

7.33s = Training runtime

0.03s = Validation runtime

Fitting model: LightGBMLarge_BAG_L2 ... Training model for up to 814.89s of the 814.88s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-701.7902 = Validation score (-root_mean_squared_error)

15.1s = Training runtime

0.01s = Validation runtime

Fitting model: WeightedEnsemble_L3 ... Training model for up to 360.0s of the 799.67s of remaining time.

Ensemble Weights: {'LightGBM_BAG_L2': 0.632, 'CatBoost_BAG_L1': 0.158, 'ExtraTreesMSE_BAG_L1': 0.105, 'XGBoost_BAG_L1': 0.105}

-664.9152 = Validation score (-root_mean_squared_error)

0.03s = Training runtime

0.0s = Validation runtime

AutoGluon training complete, total runtime = 100.43s ... Best model: WeightedEnsemble_L3 | Estimated inference throughput: 1980.5 rows/s (306 batch size)

Automatically performing refit_full as a post-fit operation (due to `.fit(..., refit_full=True)`

Refitting models via `predictor.refit_full` using all of the data (combined train and validation)...

Models trained in this way will have the suffix "_FULL" and have NaN validation score.

This process is not bound by time_limit, but should take less time than the original `predictor.fit` call.

To learn more, refer to the `.refit_full` method docstring which explains how "_FULL" models differ from normal models.

Fitting 1 L1 models ...

Fitting model: LightGBMXT_BAG_L1_FULL ...

0.32s = Training runtime

Fitting 1 L1 models ...

Fitting model: LightGBM_BAG_L1_FULL ...

0.23s = Training runtime

Fitting model: RandomForestMSE_BAG_L1_FULL | Skipping fit via cloning parent ...

0.74s = Training runtime

0.18s = Validation runtime

Fitting 1 L1 models ...

Fitting model: CatBoost_BAG_L1_FULL ...

0.29s = Training runtime

Fitting model: ExtraTreesMSE_BAG_L1_FULL | Skipping fit via cloning parent ...

0.64s = Training runtime

0.18s = Validation runtime

Fitting 1 L1 models ...

Fitting model: XGBoost_BAG_L1_FULL ...

0.08s = Training runtime

Fitting 1 L1 models ...

Fitting model: LightGBMLarge_BAG_L1_FULL ...

0.82s = Training runtime

Fitting model: WeightedEnsemble_L2_FULL | Skipping fit via cloning parent ...

Ensemble Weights: {'CatBoost_BAG_L1': 0.5, 'ExtraTreesMSE_BAG_L1': 0.25, 'XGBoost_BAG_L1': 0.2, 'LightGBMXT_BAG_L1': 0.05}

0.03s = Training runtime

Fitting 1 L2 models ...

Fitting model: LightGBMXT_BAG_L2_FULL ...

0.33s = Training runtime

Fitting 1 L2 models ...

Fitting model: LightGBM_BAG_L2_FULL ...

1.57s = Training runtime

Fitting model: RandomForestMSE_BAG_L2_FULL | Skipping fit via cloning parent ...

0.88s = Training runtime

0.18s = Validation runtime

Fitting 1 L2 models ...

Fitting model: CatBoost_BAG_L2_FULL ...

0.62s = Training runtime

Fitting model: ExtraTreesMSE_BAG_L2_FULL | Skipping fit via cloning parent ...

0.68s = Training runtime

0.17s = Validation runtime

Fitting 1 L2 models ...

Fitting model: XGBoost_BAG_L2_FULL ...

0.24s = Training runtime

Fitting 1 L2 models ...

Fitting model: LightGBMLarge_BAG_L2_FULL ...

0.68s = Training runtime

Fitting model: WeightedEnsemble_L3_FULL | Skipping fit via cloning parent ...

Ensemble Weights: {'LightGBM_BAG_L2': 0.632, 'CatBoost_BAG_L1': 0.158, 'ExtraTreesMSE_BAG_L1': 0.105, 'XGBoost_BAG_L1': 0.105}

0.03s = Training runtime

Updated best model to "WeightedEnsemble_L3_FULL" (Previously "WeightedEnsemble_L3"). AutoGluon will default to using "WeightedEnsemble_L3_FULL" for predict() and predict_proba().

Refit complete, total runtime = 5.9s ... Best model: "WeightedEnsemble_L3_FULL"

TabularPredictor saved. To load, use: predictor = TabularPredictor.load("AutogluonModels/ag-20241214_134727/ds_sub_fit/sub_fit_ho")

Deleting DyStack predictor artifacts (clean_up_fits=True) ...

Leaderboard on holdout data (DyStack):

model score_holdout score_val eval_metric pred_time_test pred_time_val fit_time pred_time_test_marginal pred_time_val_marginal fit_time_marginal stack_level can_infer fit_order

0 XGBoost_BAG_L2_FULL -673.227470 -706.566643 root_mean_squared_error 0.261725 NaN 3.356293 0.014406 NaN 0.243477 2 True 14

1 CatBoost_BAG_L2_FULL -685.276375 -679.668006 root_mean_squared_error 0.253890 NaN 3.729317 0.006571 NaN 0.616501 2 True 12

2 ExtraTreesMSE_BAG_L2_FULL -686.432329 -688.280166 root_mean_squared_error 0.384582 NaN 3.796237 0.137263 0.174881 0.683421 2 True 13

3 WeightedEnsemble_L2_FULL -687.292057 -677.748155 root_mean_squared_error 0.129074 NaN 1.357070 0.002933 NaN 0.026227 2 True 8

4 CatBoost_BAG_L1_FULL -688.830702 -682.216238 root_mean_squared_error 0.008315 NaN 0.291358 0.008315 NaN 0.291358 1 True 4

5 RandomForestMSE_BAG_L2_FULL -690.155342 -702.819447 root_mean_squared_error 0.358528 NaN 3.991187 0.111209 0.176151 0.878371 2 True 11

6 LightGBMLarge_BAG_L2_FULL -699.457560 -701.790157 root_mean_squared_error 0.256358 NaN 3.792534 0.009039 NaN 0.679718 2 True 15

7 WeightedEnsemble_L3_FULL -699.646914 -664.915201 root_mean_squared_error 0.269660 NaN 4.711405 0.004106 NaN 0.030340 3 True 16

8 RandomForestMSE_BAG_L1_FULL -700.107179 -708.557877 root_mean_squared_error 0.111719 0.175315 0.737258 0.111719 0.175315 0.737258 1 True 3

9 ExtraTreesMSE_BAG_L1_FULL -701.853556 -688.997247 root_mean_squared_error 0.105658 0.176891 0.644825 0.105658 0.176891 0.644825 1 True 5

10 XGBoost_BAG_L1_FULL -717.776000 -710.501170 root_mean_squared_error 0.008964 NaN 0.078643 0.008964 NaN 0.078643 1 True 6

11 LightGBMXT_BAG_L2_FULL -723.560168 -701.334719 root_mean_squared_error 0.251497 NaN 3.444983 0.004178 NaN 0.332167 2 True 9

12 LightGBM_BAG_L1_FULL -726.112842 -700.802863 root_mean_squared_error 0.002110 NaN 0.227411 0.002110 NaN 0.227411 1 True 2

13 LightGBM_BAG_L2_FULL -728.829307 -669.578190 root_mean_squared_error 0.265554 NaN 4.681065 0.018236 NaN 1.568249 2 True 10

14 LightGBMXT_BAG_L1_FULL -733.594747 -704.073534 root_mean_squared_error 0.003205 NaN 0.316018 0.003205 NaN 0.316018 1 True 1

15 LightGBMLarge_BAG_L1_FULL -766.964045 -715.782974 root_mean_squared_error 0.007349 NaN 0.817303 0.007349 NaN 0.817303 1 True 7

0 = Optimal num_stack_levels (Stacked Overfitting Occurred: True)

107s = DyStack runtime | 3493s = Remaining runtime

Starting main fit with num_stack_levels=0.

For future fit calls on this dataset, you can skip DyStack to save time: `predictor.fit(..., dynamic_stacking=False, num_stack_levels=0)`

Beginning AutoGluon training ... Time limit = 3493s

AutoGluon will save models to "AutogluonModels/ag-20241214_134727"

Train Data Rows: 2750

Train Data Columns: 17

Label Column: TargetSales_win

Problem Type: regression

Preprocessing data ...

Using Feature Generators to preprocess the data ...

Fitting AutoMLPipelineFeatureGenerator...

Available Memory: 479639.42 MB

Train Data (Original) Memory Usage: 0.36 MB (0.0% of available memory)

Inferring data type of each feature based on column values. Set feature_metadata_in to manually specify special dtypes of the features.

Stage 1 Generators:

Fitting AsTypeFeatureGenerator...

Stage 2 Generators:

Fitting FillNaFeatureGenerator...

Stage 3 Generators:

Fitting IdentityFeatureGenerator...

Stage 4 Generators:

Fitting DropUniqueFeatureGenerator...

Stage 5 Generators:

Fitting DropDuplicatesFeatureGenerator...

Types of features in original data (raw dtype, special dtypes):

('float', []) : 12 | ['total_sales', 'avg_purchase_frequency', 'avg_purchase_value', 'per_fashion_accessories', 'per_home_decor', ...]

('int', []) : 5 | ['recency', 'purchase_day', 'nb_product', 'nb_category', 'customer_lifetime']

Types of features in processed data (raw dtype, special dtypes):

('float', []) : 12 | ['total_sales', 'avg_purchase_frequency', 'avg_purchase_value', 'per_fashion_accessories', 'per_home_decor', ...]

('int', []) : 5 | ['recency', 'purchase_day', 'nb_product', 'nb_category', 'customer_lifetime']

0.1s = Fit runtime

17 features in original data used to generate 17 features in processed data.

Train Data (Processed) Memory Usage: 0.36 MB (0.0% of available memory)

Data preprocessing and feature engineering runtime = 0.08s ...

AutoGluon will gauge predictive performance using evaluation metric: 'root_mean_squared_error'

This metric's sign has been flipped to adhere to being higher_is_better. The metric score can be multiplied by -1 to get the metric value.

To change this, specify the eval_metric parameter of Predictor()

User-specified model hyperparameters to be fit:

{

'NN_TORCH': {},

'GBM': [{'extra_trees': True, 'ag_args': {'name_suffix': 'XT'}}, {}, 'GBMLarge'],

'CAT': {},

'XGB': {},

'FASTAI': {},

'RF': [{'criterion': 'gini', 'max_depth': 15, 'ag_args': {'name_suffix': 'Gini', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'entropy', 'max_depth': 15, 'ag_args': {'name_suffix': 'Entr', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'squared_error', 'max_depth': 15, 'ag_args': {'name_suffix': 'MSE', 'problem_types': ['regression', 'quantile']}}],

'XT': [{'criterion': 'gini', 'max_depth': 15, 'ag_args': {'name_suffix': 'Gini', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'entropy', 'max_depth': 15, 'ag_args': {'name_suffix': 'Entr', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'squared_error', 'max_depth': 15, 'ag_args': {'name_suffix': 'MSE', 'problem_types': ['regression', 'quantile']}}],

}

Excluded models: ['NN_TORCH', 'FASTAI'] (Specified by `excluded_model_types`)

Fitting 7 L1 models ...

Fitting model: LightGBMXT_BAG_L1 ... Training model for up to 3492.88s of the 3492.88s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-710.5609 = Validation score (-root_mean_squared_error)

5.2s = Training runtime

0.01s = Validation runtime

Fitting model: LightGBM_BAG_L1 ... Training model for up to 3487.59s of the 3487.59s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-696.6213 = Validation score (-root_mean_squared_error)

4.91s = Training runtime

0.01s = Validation runtime

Fitting model: RandomForestMSE_BAG_L1 ... Training model for up to 3482.58s of the 3482.58s of remaining time.

-706.2702 = Validation score (-root_mean_squared_error)

0.77s = Training runtime

0.18s = Validation runtime

Fitting model: CatBoost_BAG_L1 ... Training model for up to 3481.53s of the 3481.53s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-668.1395 = Validation score (-root_mean_squared_error)

8.92s = Training runtime

0.02s = Validation runtime

Fitting model: ExtraTreesMSE_BAG_L1 ... Training model for up to 3472.5s of the 3472.5s of remaining time.

-688.8913 = Validation score (-root_mean_squared_error)

0.62s = Training runtime

0.18s = Validation runtime

Fitting model: XGBoost_BAG_L1 ... Training model for up to 3471.6s of the 3471.6s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-699.3326 = Validation score (-root_mean_squared_error)

5.72s = Training runtime

0.04s = Validation runtime

Fitting model: LightGBMLarge_BAG_L1 ... Training model for up to 3465.77s of the 3465.77s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with SequentialLocalFoldFittingStrategy

-714.8496 = Validation score (-root_mean_squared_error)

14.1s = Training runtime

0.01s = Validation runtime

Fitting model: WeightedEnsemble_L2 ... Training model for up to 360.0s of the 3451.57s of remaining time.

Ensemble Weights: {'CatBoost_BAG_L1': 0.833, 'XGBoost_BAG_L1': 0.125, 'ExtraTreesMSE_BAG_L1': 0.042}

-667.3394 = Validation score (-root_mean_squared_error)

0.02s = Training runtime

0.0s = Validation runtime